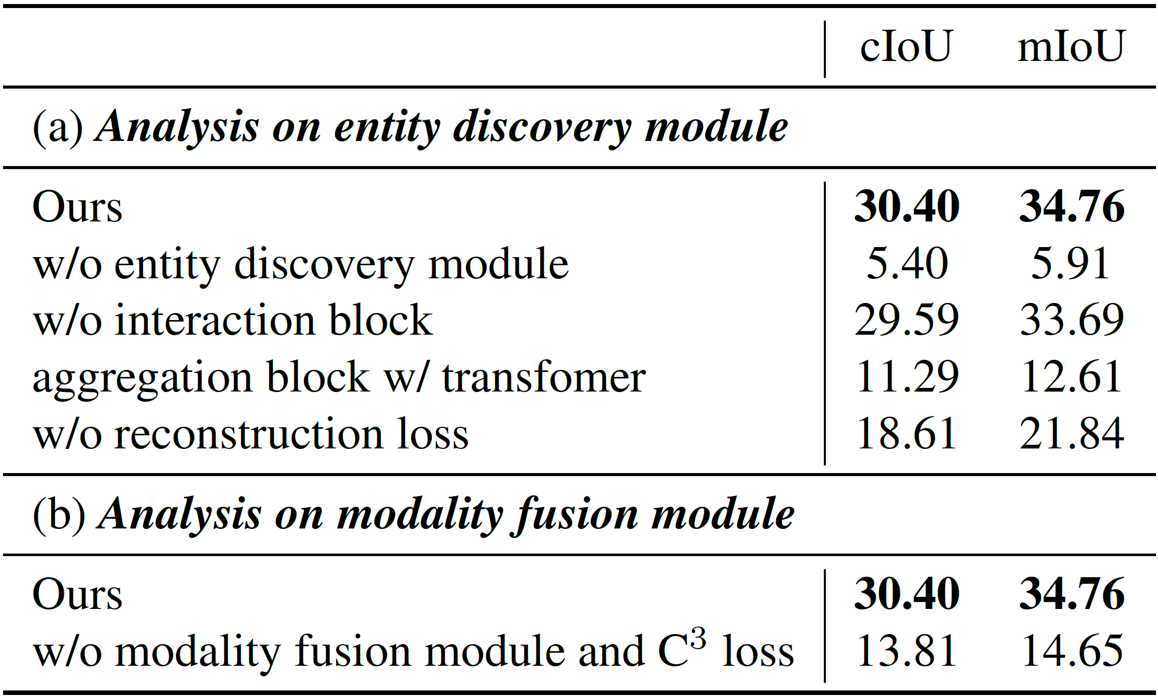

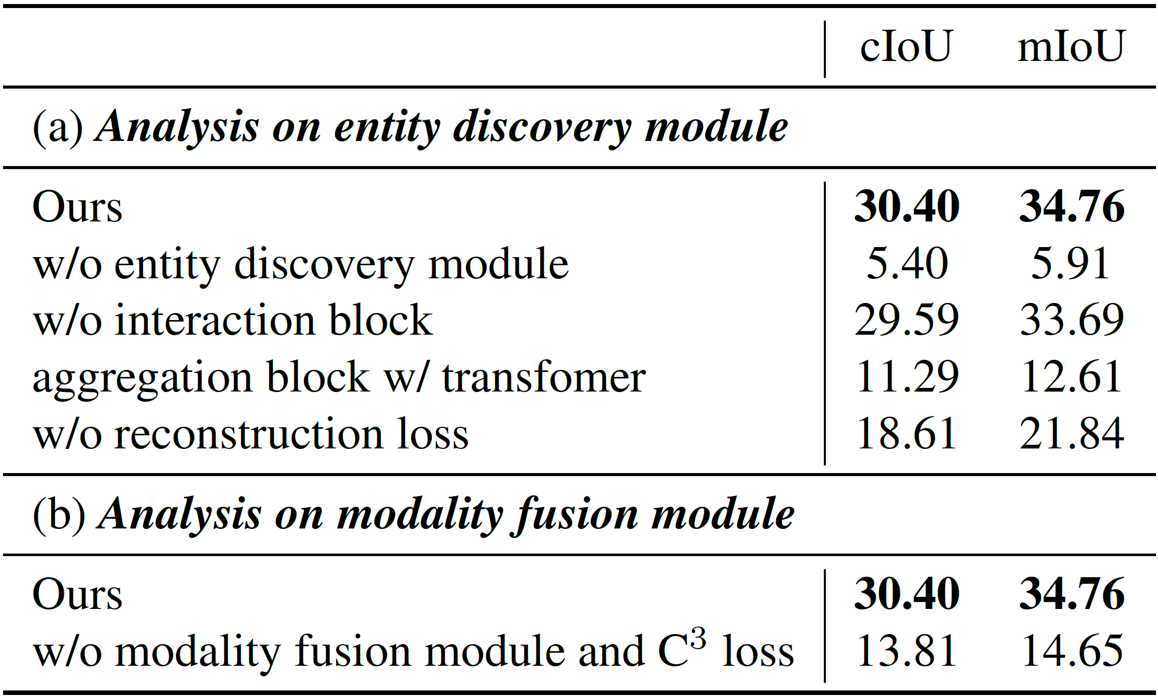

Ablation Study

Ablation studies of model components and loss function on RefCOCO val set.

Referring image segmentation, the task of segmenting arbitrary entities described in free-form text, opens up a variety of vision applications. However, manually labeling training data for this task is prohibitively costly, leading to a lack of labeled data for training. We address this issue with a weakly supervised learning approach that uses text descriptions of training images as the only source of supervision.

To this end, we first present a new model that discovers semantic entities in the input image and then combines the entities relevant to the text query to predict the mask of the referent. We also present a new loss function that allows the model to be trained without any further supervision. Our method was evaluated on four public benchmarks for referring image segmentation, where it clearly outperformed both the existing method for the same task and recent open-vocabulary segmentation models on all benchmarks.

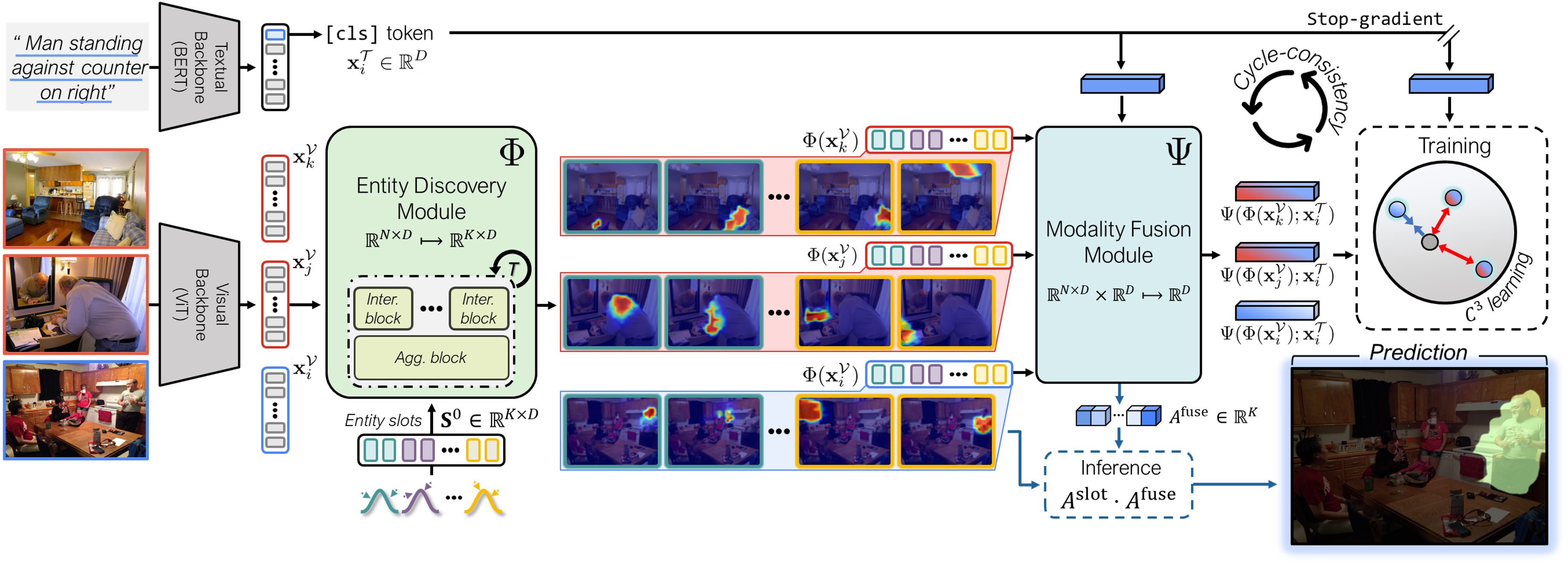

Illustration of the overall architecture of our model along with its behavior during training and inference.

(a) Feature extractors: The visual and textual features are extracted by transformer-based encoders of the two modalities, respectively.

(b) Entity discovery module: A set of visual entities is discovered from the visual features through bottom-up attention.

(c) Modality fusion module: The visual entities and a referring expression are fused considering their relevance, which is estimated by the top-down attention.

Our model is trained with the proposed contrastive cycle-consistency (C$^3$) loss between the cross-modal embeddings and textual features.
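To make the contrastive part of this objective concrete, the following is a minimal sketch of a generic symmetric InfoNCE-style loss between a batch of cross-modal embeddings and the matching textual features. It is not the exact C$^3$ formulation, which this page does not spell out; the function names, the temperature value, and the toy vectors are assumptions chosen for illustration.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def info_nce(queries, keys, temperature=0.1):
    """Mean cross-entropy of matching queries[i] to keys[i] against all keys."""
    queries = [l2_normalize(q) for q in queries]
    keys = [l2_normalize(k) for k in keys]
    total = 0.0
    for i, query in enumerate(queries):
        logits = [sum(a * b for a, b in zip(query, key)) / temperature
                  for key in keys]
        # Numerically stable log-sum-exp over all candidate keys.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_denominator - logits[i]
    return total / len(queries)


def symmetric_contrastive_loss(cross_modal, textual, temperature=0.1):
    """Average the two matching directions (assumed here, not taken from the paper)."""
    return 0.5 * (info_nce(cross_modal, textual, temperature)
                  + info_nce(textual, cross_modal, temperature))


# Toy batch of two image-text pairs with hypothetical 2-D features.
text_features = [[1.0, 0.0], [0.0, 1.0]]
aligned_embeddings = [[0.9, 0.1], [0.1, 0.9]]   # each matches its own text
swapped_embeddings = [[0.1, 0.9], [0.9, 0.1]]   # each matches the other text

loss_aligned = symmetric_contrastive_loss(aligned_embeddings, text_features)
loss_swapped = symmetric_contrastive_loss(swapped_embeddings, text_features)
```

In this toy batch the loss is small when each cross-modal embedding lies close to its own text feature and large when the pairs are swapped, which is the behavior a contrastive objective of this kind is meant to encourage.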

At inference time, the segmentation mask is predicted by jointly considering the visual entities $A^{\text{slot}}$ from the entity discovery module and the relevance scores $A^{\text{fuse}}$ from the modality fusion module.
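One simple way to picture this joint use of $A^{\text{slot}}$ and $A^{\text{fuse}}$ is a relevance-weighted sum of the entity maps followed by thresholding. The sketch below assumes exactly that; the normalization of the scores, the 0.5 threshold, and the name `predict_mask` are illustrative assumptions rather than the procedure confirmed by the paper.

```python
def predict_mask(a_slot, a_fuse, threshold=0.5):
    """Combine entity maps into one binary mask.

    a_slot: K entity maps, each a flat list of per-pixel values in [0, 1].
    a_fuse: K non-negative relevance scores, one per entity.
    The weighted-sum-then-threshold rule is an assumption for illustration.
    """
    total = sum(a_fuse)
    weights = [score / total for score in a_fuse]
    num_pixels = len(a_slot[0])
    soft_mask = [
        sum(weight * entity[pixel] for weight, entity in zip(weights, a_slot))
        for pixel in range(num_pixels)
    ]
    return [1 if value > threshold else 0 for value in soft_mask]


# Two hypothetical entities over four pixels; the first is far more relevant.
a_slot = [
    [0.9, 0.8, 0.1, 0.0],
    [0.1, 0.2, 0.9, 1.0],
]
a_fuse = [0.9, 0.1]
mask = predict_mask(a_slot, a_fuse)
```

Here the first entity dominates because of its high relevance score, so the predicted mask covers the pixels that entity occupies.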

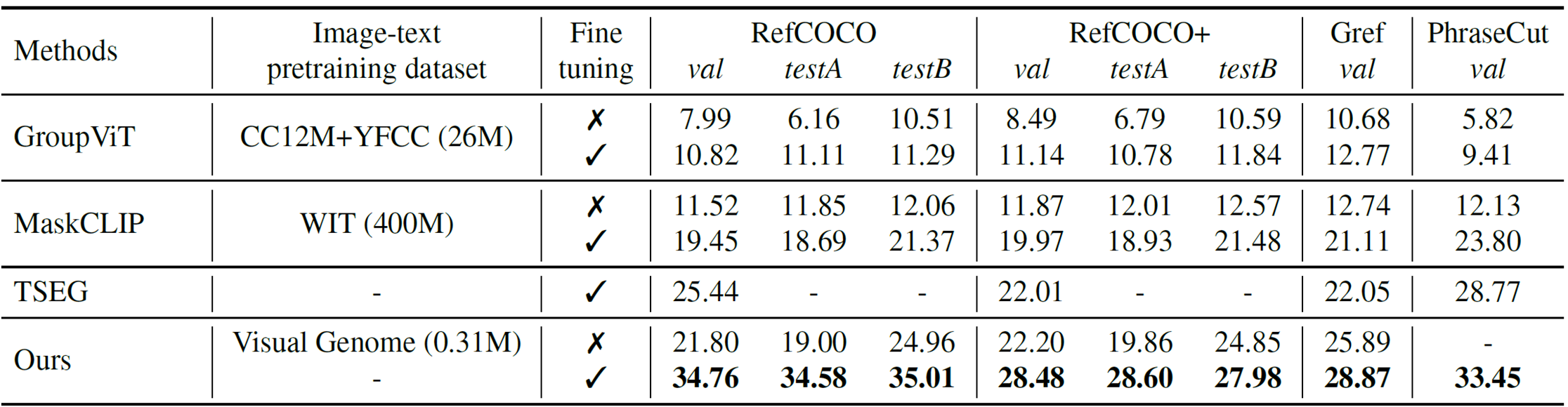

Comparison with a weakly supervised method (TSEG) and open-vocabulary segmentation methods (GroupViT and MaskCLIP). The results on four datasets are reported in mIoU (%). A check mark (✓) in the Fine-tuning column means that the model is trained with the image-text pairs of the target benchmark; otherwise, it relies only on the pre-trained weights without further training.
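For readers unfamiliar with the metric, the sketch below computes mIoU as the average of per-sample intersection-over-union between predicted and ground-truth binary masks, expressed as a percentage. This is the common definition in referring image segmentation; the helper names, the toy masks, and the convention for two empty masks are assumptions for illustration.

```python
def iou(pred, target):
    """Intersection over union of two flat binary masks of equal length."""
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


def mean_iou(preds, targets):
    """Average per-sample IoU over a dataset, in percent."""
    scores = [iou(p, t) for p, t in zip(preds, targets)]
    return 100.0 * sum(scores) / len(scores)


# Two hypothetical samples, each a flattened four-pixel mask.
preds = [[1, 1, 0, 0], [0, 1, 1, 0]]
targets = [[1, 0, 0, 0], [0, 1, 1, 0]]
miou = mean_iou(preds, targets)
```

The first sample has an IoU of 0.5 and the second an IoU of 1.0, so the toy dataset averages to 75%.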

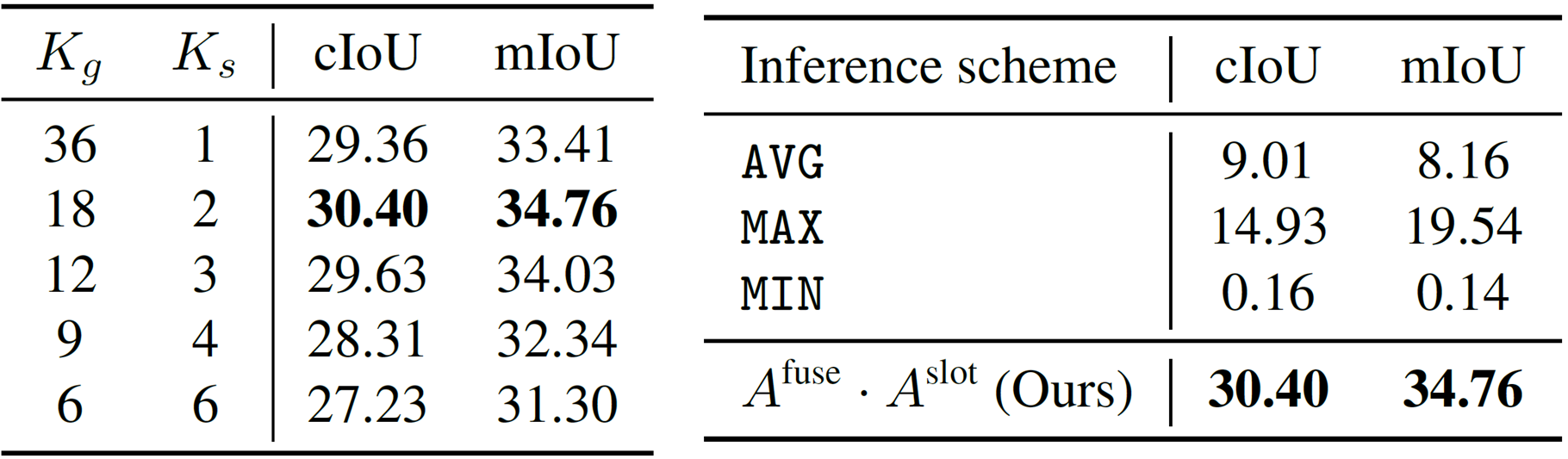

Impact of hyperparameters $K_g$ and $K_s$ (Left) and inference schemes (Right) on RefCOCO val set.

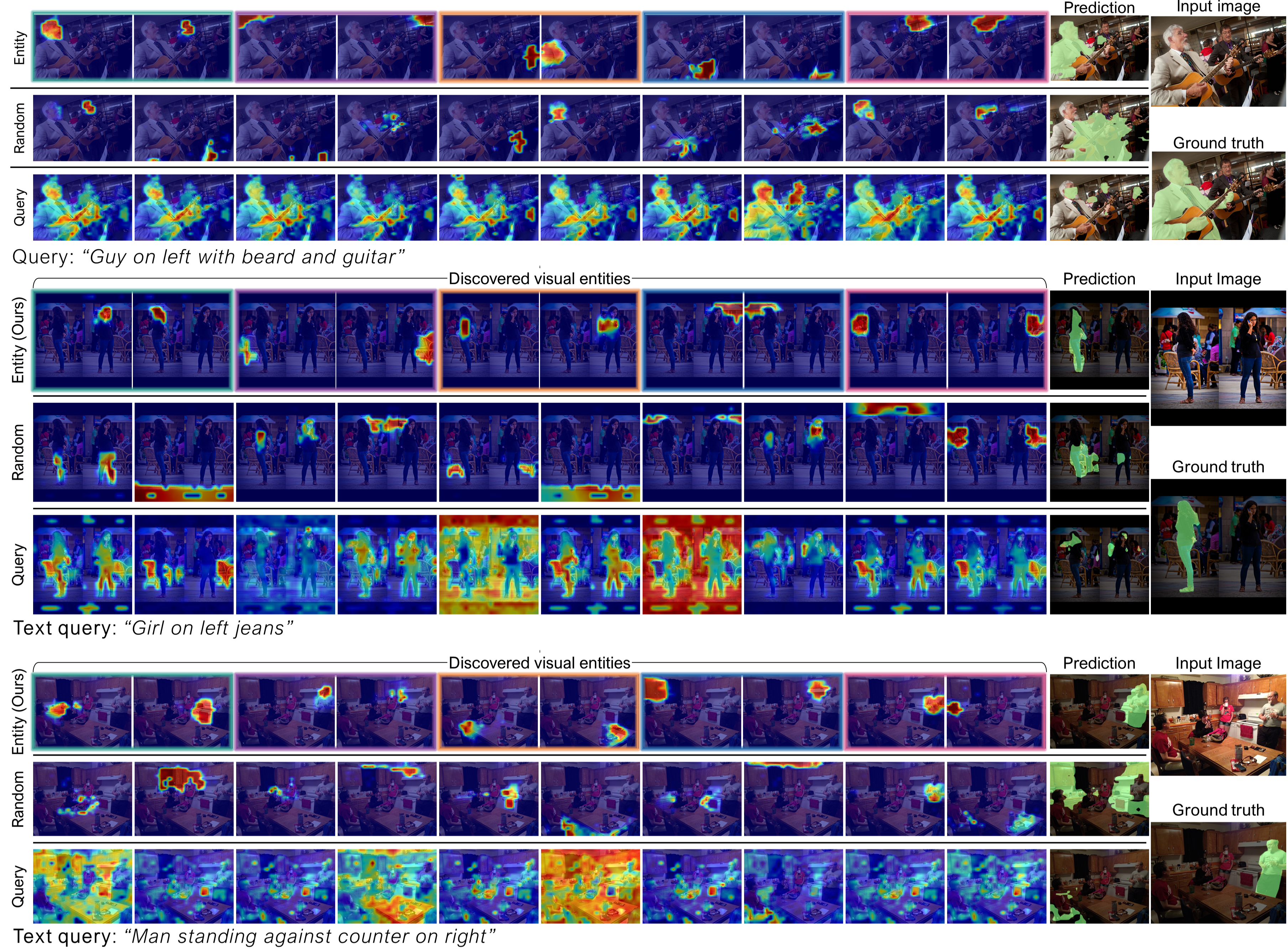

Visualization of visual entities discovered using each slot type

Qualitative results of our framework with entity slot (Ours), random slot, and query slot on RefCOCO val set.

In the case of entity slots, the boundary color indicates the Gaussian distribution that each slot is sampled from.

For each slot type, we present 10 discovered entities from $A^\text{slot}$ and the final predictions.

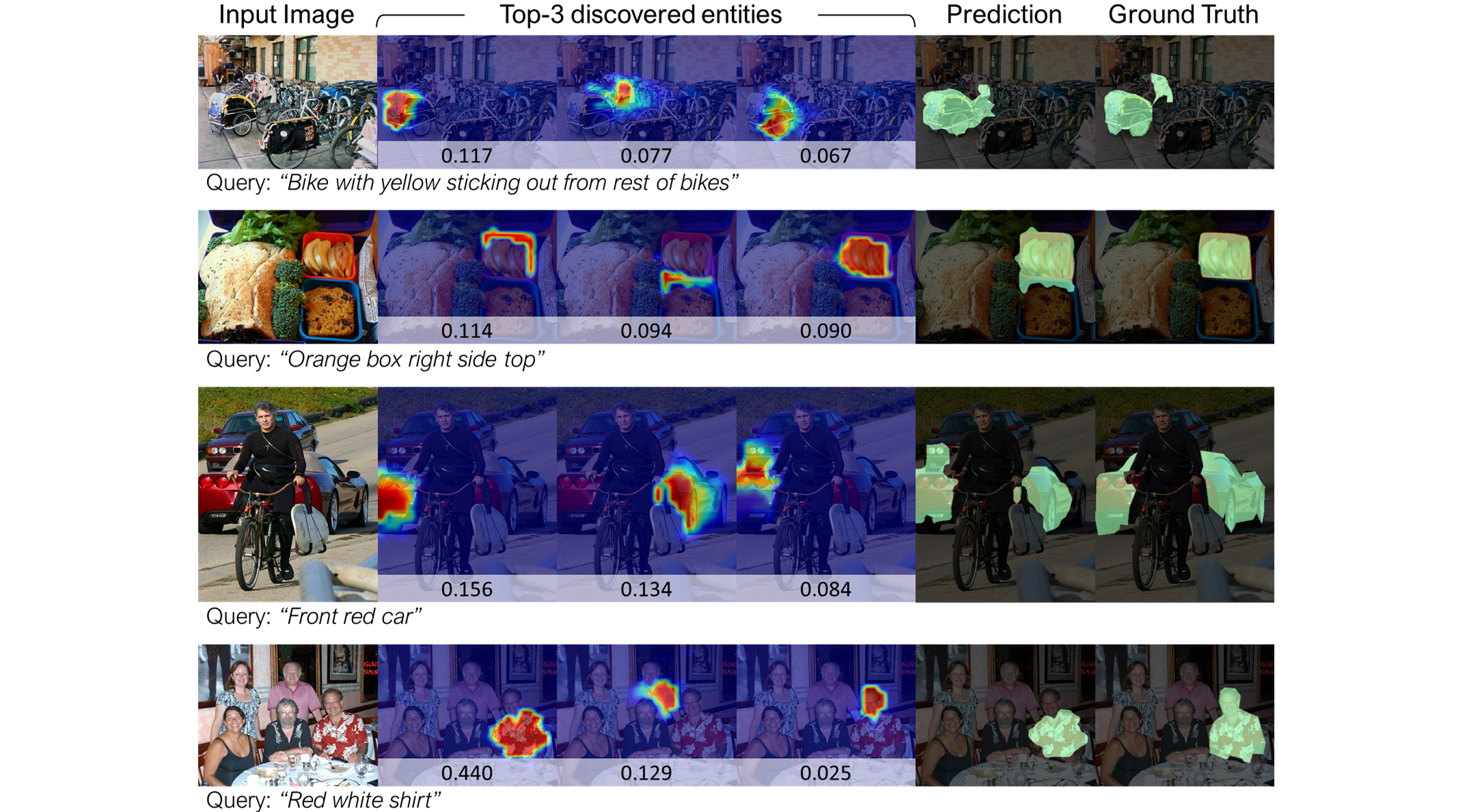

Qualitative results of our framework

We present the discovered entities from $A^{\text{slot}}$ and their relevance scores from $A^{\text{fuse}}$. The top-3 entities in terms of relevance to the query expression are shown.

@inproceedings{kim2023shatter,
  title={Shatter and Gather: Learning Referring Image Segmentation with Text Supervision},
  author={Kim, Dongwon and Kim, Namyup and Lan, Cuiling and Kwak, Suha},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  year={2023},
}